Most of the writers I know are pretty worried about AI. Understandably so. As Ethan Mollick is fond of reminding us, you are currently using the worst AI you will ever use. And already, it’s not bad. It can definitely produce plausibly fluent prose. The Chinese government is making it work with fewer Nvidia chips, removing both some of our safeguards and some of the environmental consequences. Somehow, blithe reassurances that people complained about the printing press too when it was first invented really aren’t that reassuring!

But I wanted to investigate its capabilities as a creative writer more thoroughly, so I learned some Python and started fine-tuning LLMs. My first exercise was one suggested by the writing software Novelcrafter. You create a spreadsheet with three columns. The first is just the prompt; in my case, it was, “Given a story beat, write 500 words of a scene in the style of Shannon Chamberlain.” In the third column, I pasted 500 words of my own writing. I did this about 50 times.

Then came the interesting part, which filled the second column: I put my writing back into ChatGPT and said, “Rewrite this in your own style.”
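If you want to reproduce this outside Novelcrafter, the spreadsheet eventually has to become a training file. Here is a minimal sketch under a few assumptions. The spreadsheet is exported as a CSV with a header row. The three column names (`prompt`, `base_output`, `my_writing`) are hypothetical. The target is OpenAI's chat fine-tuning format, where each line of a JSONL file holds one `messages` conversation. Putting the base model's rewrite in the user turn and my own prose in the assistant turn is one plausible way to encode "here's what you'd normally do, here's what I want instead." It is an illustration, not necessarily what Novelcrafter does under the hood.

```python
import csv
import io
import json


def spreadsheet_to_jsonl(csv_text: str) -> str:
    """Turn prompt / base-model / human-writing rows into chat-format JSONL.

    Assumes a header row with the (hypothetical) columns
    prompt, base_output, my_writing.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    lines = []
    for row in reader:
        record = {
            "messages": [
                # Column 1: the instruction shared by every example.
                {"role": "system", "content": row["prompt"]},
                # Column 2: what the base model produces on its own.
                {"role": "user", "content": row["base_output"]},
                # Column 3: the prose we want the model to learn to produce.
                {"role": "assistant", "content": row["my_writing"]},
            ]
        }
        # One JSON object per line; keep curly quotes and accents readable.
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"
```

Each resulting line is one training example. About 50 rows, as here, yields a file of about 50 lines.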

When you fine-tune an LLM, what you’re really trying to do is get it to take the output it would normally create and subtly shift it toward something more like what you want. If you know anything about LLMs at all, you know that they work essentially as highly sophisticated prediction models for prose. (In one of Mollick’s recent posts, he goes behind the scenes of OpenAI’s reasoning model, o1, to see how AI thinks through a creative problem. It’s well worth reading that post, and experimenting with this yourself, if you haven’t done so already.)
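To make "prediction model for prose" concrete, here is a deliberately toy sketch: a bigram counter that predicts the next word by picking whichever word most often followed the current one in its training text. Real LLMs predict subword tokens with a neural network conditioned on long stretches of context, so treat this only as an analogy. It does show the fine-tuning idea in miniature, though. Feed it more text in a new style, and its most likely continuation shifts.

```python
from collections import Counter, defaultdict


def train(text, counts=None):
    """Count which word follows which (a bigram model).

    Passing existing counts updates them in place, a loose analogy
    for continuing training on new text.
    """
    counts = counts if counts is not None else defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts


def predict(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


base = train("the golden sun rose and the golden rays fell and the golden sun set")
print(predict(base, "golden"))  # sun (seen twice, vs. rays once)

# "Fine-tune" on a different style: the prediction shifts.
train("the golden fur the golden fur the golden fur", base)
print(predict(base, "golden"))  # fur (now seen three times)
```

Note that the model always reaches for the most frequent continuation. That is the "predictable word" problem in its purest form.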

But this means you have to help it recognize what it would ordinarily do first, and then what you want it to do instead. So, this was the example from my own writing that I entered:

At the sound, Aunt Nora looked up. So did Hercule, the enormous pile of blonde fur lounging on a battered striped rug next to the adobe fireplace. He really wasn't supposed to be inside yet--the sun was just rising over the mountains, and the predators lingered late in the foothills--but Hercule was, like his namesake, a fastidious animal who was fond of a good breakfast. Technically, he was Hercule IV. All of his predecessors in the role of Official Guard Dog were much the same way.

Besides, there was always Shearlock. And Shearlock never punched out early.

There were many disconcerting things for Mel about being back here: in Santa Maria Aguamiel, at Agatha’s Acre. And one of them was how little the place ever seemed to change. She had once found this comforting, even amusing. Aunt Nora always had at least one Great Pyrenees named after Hercule Poirot. There were always spring crias, bleating at their mother's bellies and ready to shyly brush your hand with their milk-damp noses. Even the weather never seemed to change. Climate change came slowly to a plateau standing 8,600 feet above sea level. But this had all been one thing when Mel was Santa Maria's golden child, blowing in at Christmas and for two weeks in the summer, fresh from some new adventure, some new prestigious academic prize. Now, it felt like she was stuck in a time warp, her own perpetual Groundhog Day that she could escape if she only learned the right lesson. But what was it?

Aunt Nora--her mother for all intents and purposes ever since she'd been her fifth grade teacher--wasn't helping, even though she was trying.

"Three people are at a party. They drink scotch from the same decanter. Later that night, one of them is taken to the hospital with symptoms of severe poisoning. But when the scotch in the decanter is tested, no poison was found. How was the third man poisoned?" asked Nora, as if she were asking Mel about her plans for the day.

This had been their little ritual when Mel was young, still in school, and a teenager who had trouble waking up at 5:30 to catch the bus to the only decent prep school within 100 miles of Santa Maria Aguamiel. A riddle extracted from Aunt Nora's stack of Golden Age mysteries was the only thing--besides a cafe au lait with chile on top--that would fully wake Mel's hibernating brain.

Here’s what it returned when I asked it to rewrite the passage in its own style:

The rising sun cast its golden rays over the rugged foothills, but the imposing figure of Hercule, a majestic Great Pyrenees, already commanded the worn rug before the crackling fireplace. Breakfast was imminent, and Hercule, like his legendary namesake, was a creature of habit. His presence defied the lingering nocturnal predators, for his sense of duty, passed down through generations of Herculean predecessors, was unwavering.

The familiar surroundings of Agatha's Acre in Santa Maria Aguamiel brought a wave of disquiet over Mel. Time seemed to stand still in this high-altitude haven. The endless cycle of spring births, the predictable weather patterns, and the ever-present Hercule – all were echoes of a past that now felt more like a cage than a comfort. Once a source of pride and accomplishment, Santa Maria now loomed as a symbol of stagnation, a puzzle with a missing solution.

Aunt Nora, her unwavering maternal figure, did little to alleviate the unease. "A perplexing situation arises at a social gathering," she began, her voice cutting through the morning calm. "Three individuals partake in scotch from a shared decanter. As the night progresses, one of them falls gravely ill, exhibiting symptoms of severe poisoning. Yet, upon analysis, the scotch reveals no trace of toxins. How, then, was the third man poisoned?"

The riddle, a relic from Aunt Nora's cherished collection of Golden Age mysteries, hung in the air like a challenge. It was a familiar ritual, a nostalgic echo of Mel's teenage years when such enigmas served as the catalyst for her intellectual awakening.

Just for funsies, I asked it to provide a summary comparison of the difference between my prose and its prose.

Your Prose:

More grounded and character-driven: You focus on establishing the characters (Mel, Aunt Nora, even Hercule the dog) and their relationships. There's a strong sense of Mel's emotional state and her history with the setting.

Subtle world-building: You weave in details about the location (Santa Maria Aguamiel) and its unique atmosphere without explicitly stating everything. This creates a more immersive experience for the reader.

Emphasis on internal conflict: The main conflict seems to be within Mel herself, her struggle with returning to a place that feels both familiar and stifling.

Conversational and engaging: Your writing has a natural flow and uses vivid language that draws the reader in.

AI Prose:

More descriptive and atmospheric: I tend to focus on creating a strong sense of place and mood through detailed descriptions.

Slightly more formal tone: My language is generally more precise and less colloquial than yours.

Focus on the mystery: I highlighted the riddle and the "perplexing situation" perhaps more than your original, leaning into the AI's tendency to prioritize information and problem-solving.

Less emotional depth: While I tried to capture some of Mel's unease, my version likely lacks the nuanced portrayal of her internal conflict present in your writing.

It’s interesting to me that the base model can identify the differences (I might characterize them as issues) between our styles. I decided to use this to write it a better prompt, so I started a new conversation and asked it to write in the style it had attributed to me. It came up with more or less the same output as before: a couple of very minor word changes, but the same overblown atmospherics and the same reaching for Latinate words when good old English would do. No noticeable improvement.

This highlights for me the limitations of prompt engineering, at least when it comes to creative writing. Until writers start learning en masse to fine-tune LLMs, I’m not sure that AI is going to offer much of a productivity boost. And fine-tuning assumes that your prose is good in the first place. Or at least that it’s good enough to sell.[1]

With my fine-tuned model, built on GPT-4o and run in OpenAI’s Playground, the AI does a bit better. I gave it a fairly detailed scene beat from a different novel, and it came up with this:

The cold had teeth.

Sharp, voracious, and merciless. Incisors that sliced, molars that ground down to the bone, and canines—yes, canines most of all.

The National Weather Service had reported a temperature, a wind chill, a probability of snow. What it had failed to capture, however, was the predatory nature of the night itself, the way it lunged and bit. Julia fought her way forward, or rather, she fought against the snow, as it swallowed her steps and stole her footing. One foot clad in a ballet flat, the other bare, she might as well have been walking through a set of jaws.

She had dressed sensibly for the day—layers, a t-shirt beneath a button-down, topped with a fuzzy sweater. Her knee-length skirt had been a poor choice in hindsight, but she had compensated with thick, fleece-lined tights, purchased during an end-of-season sale. A sale that, as it turned out, had signaled the end of winter somewhere else.

If you knew Julia’s office in Carson Hall, you knew about the two coats behind her door. One was a tweedy, professional affair, suitable for campus tours with prospective students, donors, and their hovering parents. The other was a long, heavy-duty parka in a drab shade of military utility, purchased after an overconfident winter nearly bested her once before. The L.L. Bean website had promised her it was engineered for temperatures as low as -40°F.

But in her rush, she had reached for the wrong one. She had assumed she’d be back within the hour, returning to the golden pools of light cast by the desk lamps, to the aging but beloved rug, the sagging but comfortable armchair, the compact space heater humming beneath her desk.

That, of course, is not what happened.

Even now, from the comfort of my study, a fire crackling in the grate, a cat curled warm and purring in my lap, I shudder to think of it. The cold, gnawing at her exposed skin, biting deeper with every second. Hypothermia is a cruel and arbitrary thing. It does not follow a predictable script. The Nazis at Dachau, in their grim pursuit of knowledge, never found the precise threshold at which the body succumbs. Women, with their higher fat percentage and lower surface area, often fare better than men. A two-year-old child in Saskatchewan once wandered into the frozen night and was found with a core temperature of 57 degrees—she lived. But in 1964, three fit, strong men, dressed for a brisk walk on a British moor, collapsed and died before the temperature had even dipped to freezing.

Julia, barefoot and bleeding into the snow, had no such certainty.

There’s still a bit of AI-ism here (too many adjectives, too much reaching for the predictable word, too much…uh, much), but it’s better. It even came up with the same first line that I did in my original draft, although mine proceeds in what I, at least, judge to be a better and less predictable way:

Incisors, molars, and canines. Canines for sure.

Still, I could see myself editing and reshaping the fine-tuned version faster than I could write something new, provided that I knew what was going to happen in the scene. I’ve had better luck using AI as a plotting collaborator, perhaps because my plotting is weaker than my prose style. [Insert Nabokov joke about fancy prose style here.] It took 50 examples of my prose to get the fine-tuned model to this level, and given more time, I’d like to see whether doubling or tripling the examples would noticeably improve the fine-tune.

But that got me thinking. Could I fine-tune an LLM to produce one of my favorite literary devices, the well-engineered plot twist?

There’s a book on the well-engineered plot twist by the Case Western Reserve cognitive scientist Vera Tobin, called Elements of Surprise. It’s definitely written for academics rather than the general public, and I’m not sure how useful it is to the latter. But it does provide a helpful definition of a “good” plot twist. Rephrased for people who don’t much relish struggling through Derrida, it’s something like, “A good plot twist is one that you don’t see coming, but on a second read, you definitely think you should have seen coming.” In other words, it doesn’t bring characters you haven’t met onto the stage, or use information that you couldn’t possibly have known. It follows the ten commandments of fair play that Ronald Knox drew up for the detective novel.

So, still using the three-column spreadsheet format that I’d used to train the LLM on my style, I came up with the prompt: “Construct a well-engineered plot twist that subverts reader expectations following the rules of fair play given a scenario where they have the specified expectations.”

Now, obviously this differs from the above experiment in being considerably less straightforward and requiring two columns of input from me, rather than one from me and one from the original base model. And maybe that’s why my results were so poor. But to give an example of a famous twist that I hope won’t be too spoilery at this point in movie history, here was my Column A:

The psychiatrist who helps the boy cope with seeing ghosts is a living person who is visible to his wife, the boy’s mother, and all other humans in the story.

And, of course, the plot twist is:

The psychiatrist is actually a ghost himself, and only the boy can see him. He never directly speaks to or interacts with any other character.

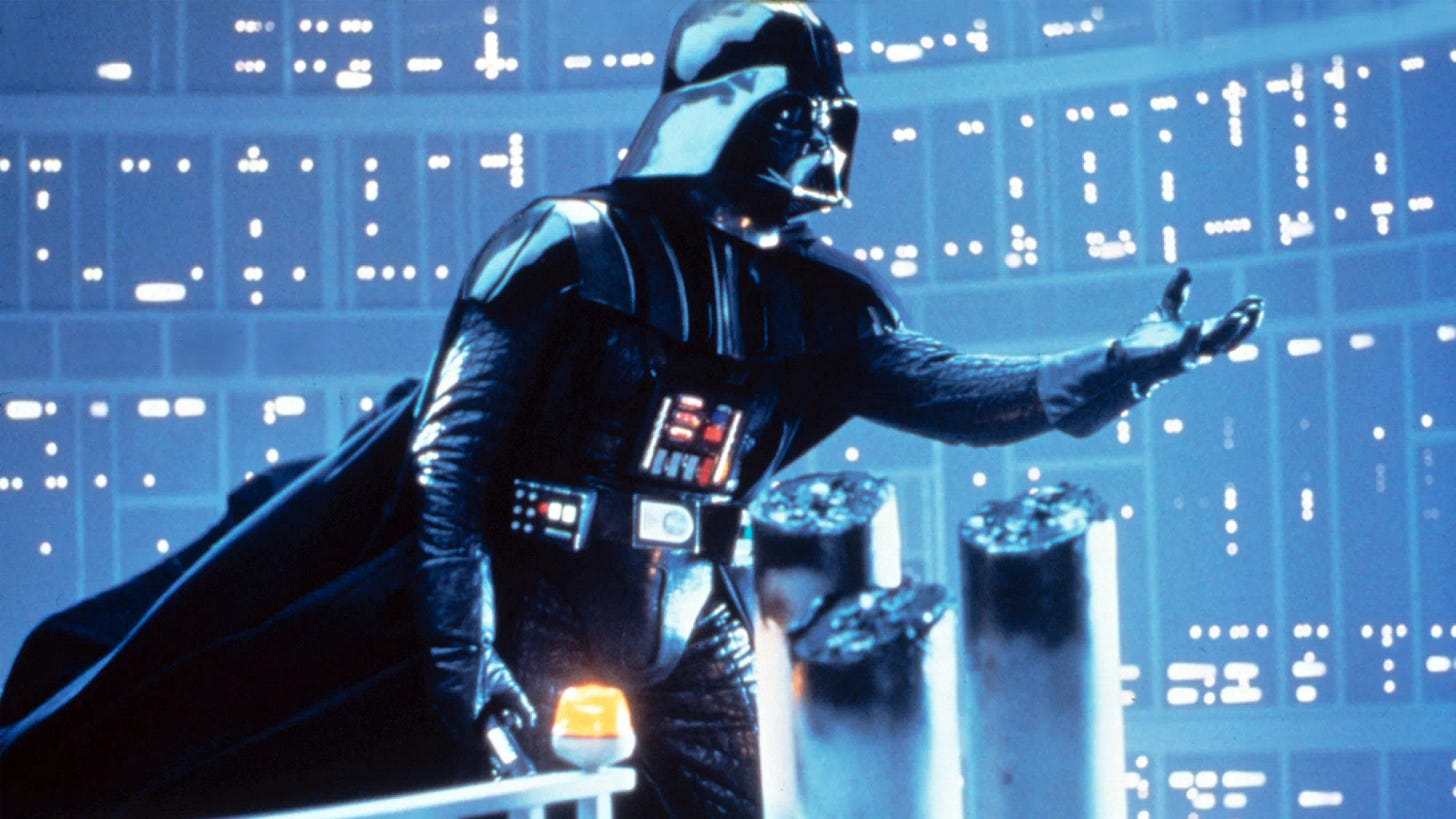

I even used AI a bit to help me come up with clues supporting each twist to add to the spreadsheet. I think it did a good job for the most part; in one case, it picked up something I’d never noticed in the first Star Wars movie that prepares for the twist at the end of The Empire Strikes Back.

I was able to come up with about 50 examples from literature and film of my favorite well-engineered twists. I uploaded them and fine-tuned my model.

Yikes.

The fine-tune’s problems fell into two categories: either it came up with a totally predictable solution to the plot problem, or it hallucinated wildly, introducing new characters or ideas that didn’t appear in the context I gave it (outlines, story beats, and character profile lists) and breaking the fair-play rules with abandon. I even tried inputting the fair-play rules in the context window, but as far as I could tell, it totally ignored them. I tried interacting with it to correct the hallucinations, drawing its attention to the rule it had broken with its proposed solution, and it would agree (in sycophantic AI fashion) that it had broken it, but then just introduce a new species of the same problem.
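Of those two failure modes, the second one is at least partly mechanical: characters who appear out of nowhere. That means it can be screened for before a human reads the output. Here is a crude sketch, assuming you keep a list of character names from your profiles. The hypothetical helper below flags capitalized words in a proposed twist that appear neither in that list nor anywhere in the context you supplied. It is a heuristic, not an implementation of Knox's rules. It skips sentence-initial words, which are capitalized anyway, so it can miss a new name that opens a sentence. It also can't catch new ideas or unnamed newcomers at all.

```python
import re


def unknown_names(twist: str, context: str, known_names: list[str]) -> list[str]:
    """Flag capitalized words in `twist` that never appear in `context`
    or `known_names`: a rough proxy for 'introduced a new character'."""
    # Everything the reader has already seen, lowercased for comparison.
    allowed = {w.lower() for w in re.findall(r"[A-Za-z]+", context)}
    allowed |= {part.lower() for name in known_names for part in name.split()}
    flagged = []
    for match in re.finditer(r"\b[A-Z][a-z]+\b", twist):
        word = match.group()
        # Words that open a sentence are capitalized regardless, so skip them.
        before = twist[: match.start()].rstrip()
        if not before or before[-1] in '.!?"':
            continue
        if word.lower() not in allowed and word not in flagged:
            flagged.append(word)
    return flagged


context = "Mel returns to Agatha's Acre. Aunt Nora tells a riddle about scotch."
twist = "The poison was in the ice, which Nora froze. Her brother Desmond helped."
print(unknown_names(twist, context, ["Mel", "Aunt Nora"]))  # ['Desmond']
```

A non-empty result is a cue to push back on the model or regenerate, not proof of a fair-play violation.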

I guess this should have been, uh, predictable. (Womp womp.) I think what I was doing was trying to get a prediction machine to predictably predict the unpredictable. With all of the caveats about my lack of skill as a programmer, I think I can say that a truly well-engineered plot twist is probably outside of AI capabilities at the moment. And when I think about it, there is something so human about wanting not just a surprise, but a surprise that you can experience retrospectively as the joy of being fooled. It has something bittersweet about it: you were tricked, but you liked being tricked. You paid money to be tricked.

So, writers, get better at plot twists. It’s at least one thing standing between us and creative oblivion!

[1] And Fifty Shades of Grey sold a bazillion million copies, so that’s probably not as high a bar as I think it is.